Everyone always hopes to have a complete data-frame, whose features boast a homogeneous depth of data, in order to be able to lay a solid foundation in order to start developing a Machine Learning model. Unfortunately, in the real world what tends to happen is precisely the opposite and it is customary to have to manipulate droves of datasets containing missing values.

Usually, the first solution at hand seems to be to remove lines that report blank or NaN values. However, this choice can often be counterproductive, as it can result in the loss of valuable information, especially in cases where the dataset already has little historical depth. Therefore, given the frequency of occurrence and the weight of this issue on the performance of the results, it seemed appropriate to bring to the attention of the new ideas to be taken into consideration in the Preprocessing phase. In particular, a successful solution, as an alternative to classical approaches such as PCA (Principal Component Analysis) and Feature Selection, is the imputation of missing data, that is, obtaining the latter from known data.

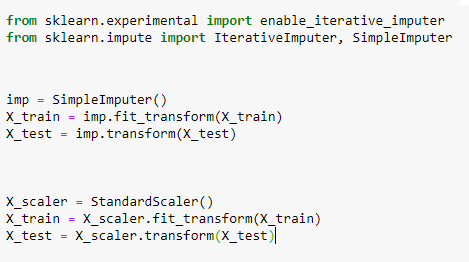

The “univariate” type imputation involves the completion of the missing values of a specific feature using the dimensions available to the feature itself. In Python, in these cases the SimpleImputer package of the sklearn.impute library is used, which by default provides for the imputation of the features by averaging the known data. Alternatively, you can set the mediana, the most_frequent (str) and constant (str) as the method.

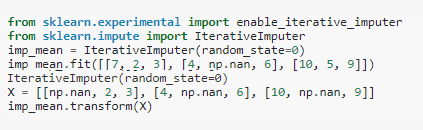

Finally, a further approach used for filling the dataset, of the “multivariate” type, foresees the features with missing values as a function of the other features by way of Round Robin type scheduling. In Python, the IterativeImputer is used for this approach, again from the sklearn.impute library.

The multivariate approach is certainly more sophisticated than the univariate one. However, both approaches, both SimpleImputer and IterativeImputer, can be used in a pipeline as a way to build a composite estimator that supports imputation.

Author: Francesca Giannella | Senior Data Scientist DMBI Photo by Marisa Morton on Unsplash